Incident review: database load, cascading failures, and what we learned - January 27, 2026

On Tuesday, January 27, we had a production incident that degraded performance across the entire platform and caused full downtime for some enterprise customers with high-volume event types.

The incident began with elevated database load. It appeared to be resolved, but then exposed secondary failures in upstream systems. This post explains what happened, how we confirmed it, and what we changed as a result.

Impact

Lower-volume users experienced high latency and slow page loads

Enterprise customers with heavy event types experienced timeouts and outages

Later in the incident, users with Google Calendar integrations faced intermittent failures

What we saw first

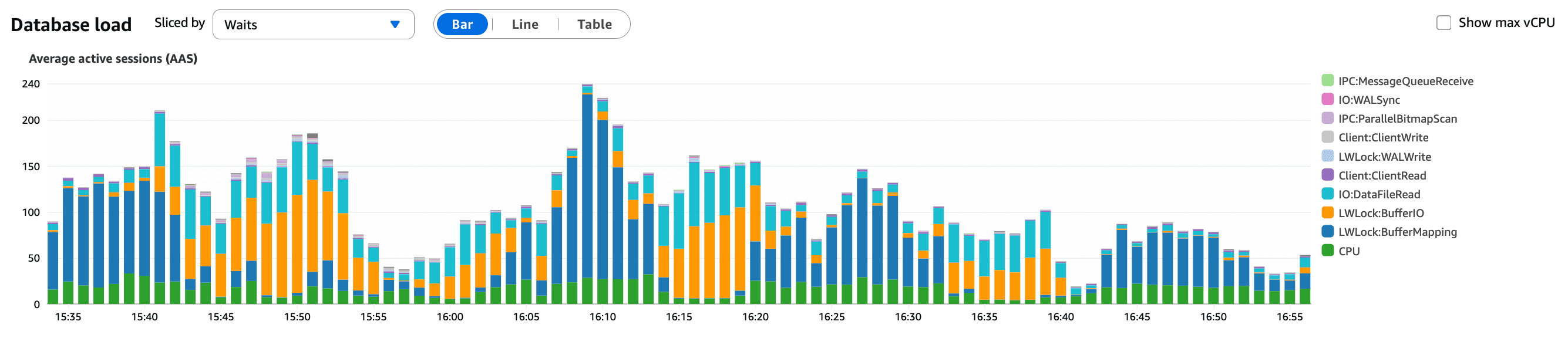

At 15:30 UTC, automated alarms fired because of rising database load. Initial investigation showed an increase in Average Active Sessions (AAS) and a rapid rise in statement latency, while total traffic stayed within expected bounds.

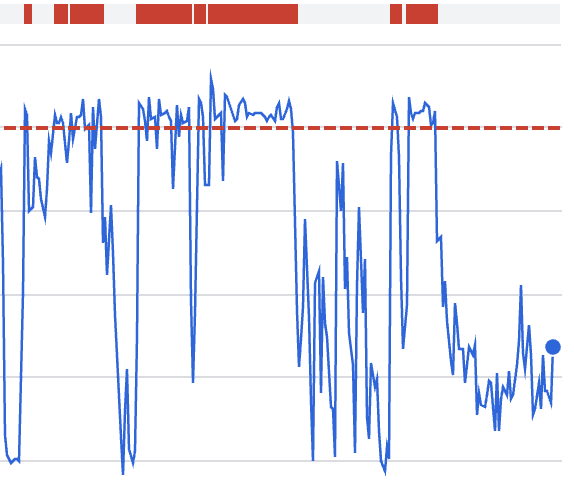

What this chart shows:

A sustained increase in active database sessions

Sessions piling up instead of completing quickly

A strong signal that queries were getting slower, not that traffic had increased

This pointed to a change in our application rather than a traffic spike, which traffic analysis then confirmed.
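A minimal sketch of why flat traffic can still produce rising AAS: by Little's law, the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. The query rate and latencies below are hypothetical, chosen only for illustration; they are not measurements from this incident.

```typescript
// Little's law: average concurrency = arrival rate × average time in system.
// Applied to a database, concurrency is roughly the number of active sessions.
function averageActiveSessions(queriesPerSecond: number, avgLatencyMs: number): number {
  return (queriesPerSecond * avgLatencyMs) / 1000;
}

// Hypothetical workload: the query rate is identical in both cases.
const queriesPerSecond = 2000;
const before = averageActiveSessions(queriesPerSecond, 5); // 5 ms per query
const after = averageActiveSessions(queriesPerSecond, 50); // 50 ms per query

console.log(`AAS before: ${before}, after: ${after}`); // AAS before: 10, after: 100
```

A tenfold slowdown per query yields ten times as many concurrently active sessions with no change in traffic, which is the shape described above: sessions accumulating because each one takes longer, not because more of them arrive.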

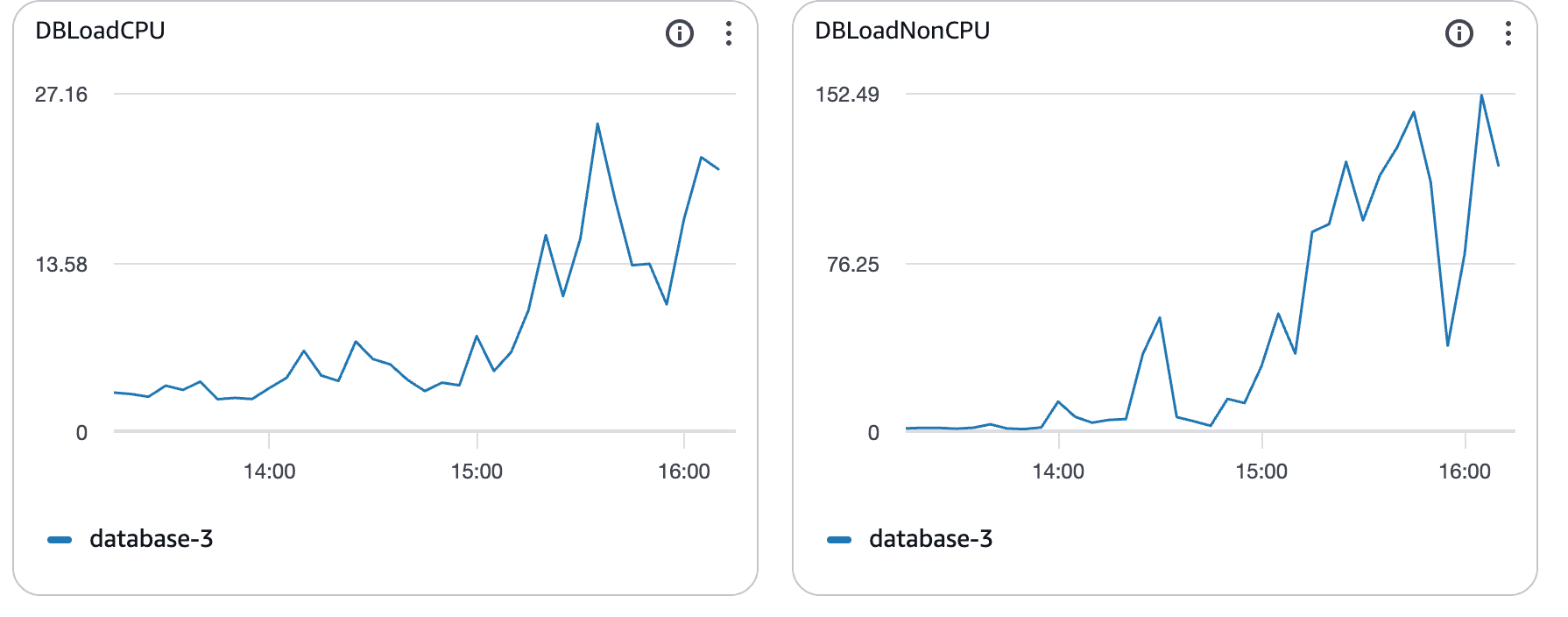

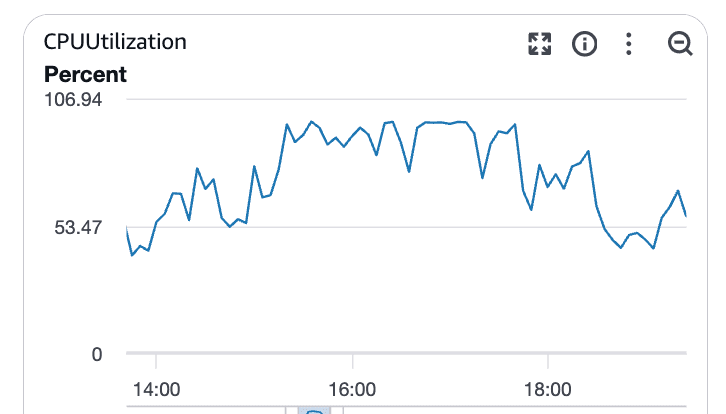

CPU saturation confirms the bottleneck

As load increased, database CPU usage followed.

What these charts show:

CPU utilization climbing well above baseline

Sustained saturation rather than short spikes

A clear correlation with rising query latency

At this point, Postgres was CPU-bound. Queries were taking longer and blocking others, and the latency spread throughout the system.

Timeline (UTC)

15:30 – Automated alarms for high DB load. Initial diagnostics showed elevated `DELETE` activity on APIv2.

15:49 – Tightened rate limits on `DELETE` requests to reduce immediate pressure.

16:03 – Further investigation showed that traffic was normal. Database load kept rising.

16:06 – First reports from enterprise customers unable to load event types.

16:18 – Incident declared. CPU saturation drove up statement latency across the entire system.

17:07 – Began rewriting a high-load query to reduce pressure on the database.

17:40 – Query rewrite deployed. This helped, but did not fully resolve the problem.

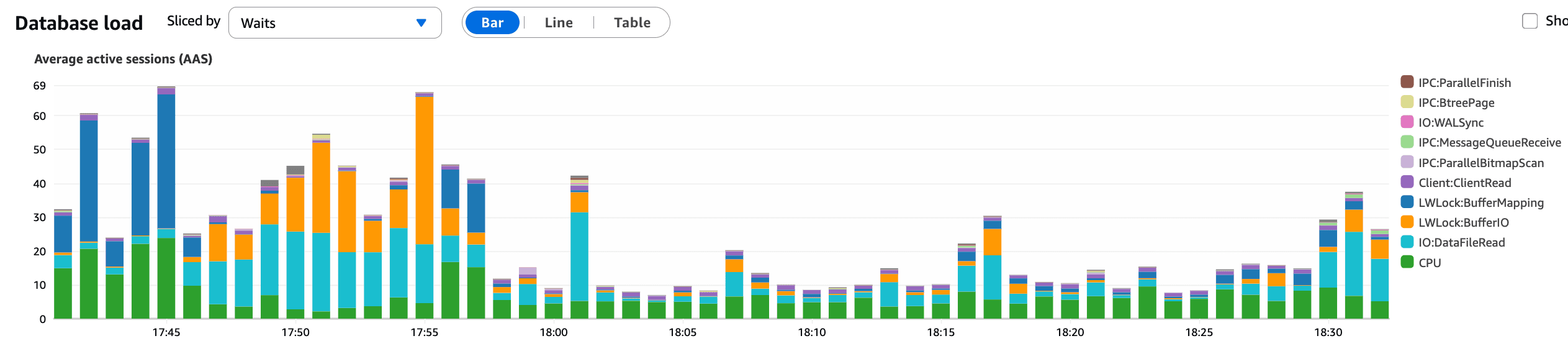

The key turning point

At 17:45, we identified an unintended interaction:

A recent feature, released on Monday the 26th, added booking search to KBar (CMD+K). This caused a heavy API call to run on every page load in the web app.

At 17:55, the KBar change was reverted in production.

What this chart confirms:

A clear, immediate drop in AAS after the revert

Reduced query pressure on the database

Latency stabilizing shortly afterwards

The drop lines up precisely with the timing of the revert, which confirmed the KBar interaction as the main trigger of the database incident.

18:15 – Database performance stabilized.

The primary DB incident was resolved at this point.

Secondary issues discovered

After the database recovered, a smaller subset of users remained affected.

Users with Google Calendar integrations experienced failures because of Google API quota exhaustion. This was caused by retry behavior across multiple consumers while upstream errors were occurring.

What this chart shows:

Rapid quota consumption during the incident window

Correlation with retry behavior rather than new traffic

18:40 – Root cause identified: Atoms retry logic was refreshing calendars aggressively.

18:46 – Atoms functionality temporarily paused. Calendar events were backfilled and quota usage dropped.

18:54 – APIv2 performance remained slightly degraded; some traffic was temporarily rerouted to Vercel endpoints.

19:30 – APIv2 and the Google integrations fully stabilized.
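The post does not describe the Atoms retry logic itself. A common defense against retry storms of this kind is capped exponential backoff with random jitter, combined with refusing to retry errors (such as quota exhaustion) that another attempt cannot fix. The sketch below shows that general technique under those assumptions; `withRetry`, `RetryOptions`, and the `isRetryable` predicate are hypothetical names, not part of Cal.com or the Google APIs.

```typescript
interface RetryOptions {
  maxAttempts: number; // total tries, including the first call
  baseDelayMs: number; // backoff ceiling for the first retry
  maxDelayMs: number; // hard cap on any single backoff
  // Hypothetical predicate: return false for errors such as "quota exceeded",
  // where another attempt would only consume more of the remaining quota.
  isRetryable: (err: unknown) => boolean;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Exponential backoff with "full jitter": a random delay in
// [0, min(maxDelayMs, baseDelayMs * 2^attempt)), so that many consumers
// failing at the same moment do not all retry in lockstep.
function backoffDelay(attempt: number, opts: RetryOptions, random: () => number = Math.random): number {
  const ceiling = Math.min(opts.maxDelayMs, opts.baseDelayMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

async function withRetry<T>(fn: () => Promise<T>, opts: RetryOptions): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      const isLastAttempt = attempt + 1 >= opts.maxAttempts;
      // Give up on the final attempt or on errors that retrying cannot fix.
      if (isLastAttempt || !opts.isRetryable(err)) throw err;
      await sleep(backoffDelay(attempt, opts));
    }
  }
}
```

A caller might wrap a calendar refresh as `withRetry(() => refreshCalendar(id), { maxAttempts: 4, baseDelayMs: 250, maxDelayMs: 8000, isRetryable: (e) => !isQuotaError(e) })`, where `refreshCalendar` and `isQuotaError` are likewise hypothetical.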

Later, we identified an additional contributing issue:

An unawaited promisified call caused Node processes to crash when Google API errors escaped to the global scope.
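The post does not show the offending code, so the following is a hypothetical reconstruction of the general bug class rather than the actual APIv2 code. `refreshCalendar` is an invented stand-in for a promisified Google API call that fails. On Node.js 15 and later, an unhandled promise rejection terminates the process by default, which is how an error in a "fire and forget" call can take down a whole server.

```typescript
// Hypothetical stand-in for a promisified Google API call that fails.
function refreshCalendar(userId: string): Promise<void> {
  return Promise.reject(new Error(`upstream error for ${userId}`));
}

// Buggy shape: the promise is neither awaited nor given a .catch().
// Its rejection surfaces as an "unhandledRejection" at the global scope.
function handleRequestBuggy(userId: string): void {
  refreshCalendar(userId); // floating promise
}

// Fixed shape: await the call so the rejection lands in this try/catch.
async function handleRequestFixed(userId: string): Promise<string> {
  try {
    await refreshCalendar(userId);
    return "refreshed";
  } catch (err) {
    // Handle locally (log, record a metric, degrade) instead of crashing.
    return `degraded: ${(err as Error).message}`;
  }
}
```

Lint rules such as typescript-eslint's `no-floating-promises` can flag the buggy shape during review, before it reaches production.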

Root cause

The primary cause was an unexpected interaction between features:

KBar triggered a heavy API call on every page load

That API path relied on expensive database queries, particularly for large-scale users with many bookings

CPU saturation increased query latency across the entire system

Enterprise customers with high-volume event types were the most affected

The secondary failures (Google quota exhaustion and APIv2 instability) were not the original cause, but they surfaced once the system was under stress.
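The post does not describe how the KBar search was wired, only that it ran a heavy API call on every page load. As a hypothetical illustration of the alternative, the sketch below defers the search until the palette is open and the user has typed a query, and reuses in-flight or completed requests per query. `LazyBookingSearch` and the `search` callback are invented names, not Cal.com or KBar APIs.

```typescript
// Hypothetical signature for the expensive booking-search API call.
type SearchFn = (query: string) => Promise<string[]>;

// Hypothetical search source for a command palette (not a KBar API).
// Constructing it, which would happen on page load, performs no request.
class LazyBookingSearch {
  private isOpen = false;
  private readonly cache = new Map<string, Promise<string[]>>();
  private readonly search: SearchFn;
  private readonly minQueryLength: number;

  constructor(search: SearchFn, minQueryLength = 2) {
    this.search = search;
    this.minQueryLength = minQueryLength;
  }

  open(): void {
    this.isOpen = true;
  }

  close(): void {
    this.isOpen = false;
  }

  // Calls the API only while the palette is open and the query is long enough;
  // repeated queries share one request instead of issuing new ones.
  query(text: string): Promise<string[]> {
    const q = text.trim();
    if (!this.isOpen || q.length < this.minQueryLength) return Promise.resolve([]);
    let pending = this.cache.get(q);
    if (pending === undefined) {
      pending = this.search(q);
      this.cache.set(q, pending);
      // Evict failed requests so a later attempt can retry; the caller still
      // receives the original rejection through the returned promise.
      pending.catch(() => this.cache.delete(q));
    }
    return pending;
  }
}
```

A production version would likely also debounce keystrokes and bound the cache size; both are omitted to keep the sketch short.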

What we changed

Since Tuesday, we have:

Removed the KBar hookup that triggered heavy API calls (#27314)

Shipped several query optimizations to reduce DB load (#27364, #27319, #27309, #27315)

Switched pagination for `/booking/{upcoming,…}` from prerendered to on-demand, reducing database usage while we continue to optimize the endpoint (#27351)

Fixed the unawaited async call that was crashing the Node process in APIv2 (#27313)

Implemented several DB-level safeguards to protect our database during periods of extreme usage

Limited calls to `DELETE` endpoints to 1 request per second (#27359)
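A token bucket is one common way to enforce a limit like 1 request per second. The post does not say which algorithm #27359 uses or how requests are keyed, so both the algorithm and the per-key scheme below are assumptions; `TokenBucketLimiter` is a hypothetical name, and the injectable clock exists only to make the sketch testable.

```typescript
// Minimal per-key token bucket (hypothetical, not the Cal.com implementation).
// With capacity 1 and a refill rate of 1 token per second, it admits at most
// one request per second per key.
class TokenBucketLimiter {
  private readonly buckets = new Map<string, { tokens: number; updatedAt: number }>();
  private readonly capacity: number;
  private readonly refillPerMs: number;
  private readonly now: () => number;

  constructor(capacity: number, refillPerSecond: number, now: () => number = Date.now) {
    this.capacity = capacity;
    this.refillPerMs = refillPerSecond / 1000;
    this.now = now;
  }

  // Returns true if the request may proceed; false means respond with HTTP 429.
  tryAcquire(key: string): boolean {
    const t = this.now();
    const bucket = this.buckets.get(key) ?? { tokens: this.capacity, updatedAt: t };
    // Refill for the time elapsed since the last check, never above capacity.
    bucket.tokens = Math.min(this.capacity, bucket.tokens + (t - bucket.updatedAt) * this.refillPerMs);
    bucket.updatedAt = t;
    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    this.buckets.set(key, bucket);
    return allowed;
  }
}
```

For a `DELETE` handler, the check would run before any database work, for example `if (!limiter.tryAcquire(apiKey)) return tooManyRequests();`, where `apiKey` and `tooManyRequests` are hypothetical.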

Conclusion

This incident was not caused by a traffic spike or an infrastructure failure. It was caused by an unexpected feature interaction, and it cascaded through upstream retry behavior and a subtle application bug.

We're sorry for the disruption, especially for customers operating at large scale. Tuesday's incident has directly shaped improvements to how we ship features, protect our database, and handle cascading failures.

Thank you for your patience, and for holding us to a high standard.

Get started with Cal.com for free today!

Experience seamless scheduling and productivity with no hidden fees. Sign up in seconds and start simplifying your scheduling today, no credit card required!