Incident review: database load, cascading failures, and what we learned - January 27, 2026

On Tuesday, January 27th, we experienced a production incident that caused degraded performance across the platform and full downtime for some enterprise customers with high-volume event types.

The incident started as elevated database load, appeared resolved, and then revealed secondary failures in downstream systems. This post explains what happened, how we confirmed it, and what we changed as a result.

Impact

Low-volume users experienced high latency and slow page loads

Enterprise customers with heavy event types experienced timeouts and downtime

Later in the incident, users with Google Calendar integrations saw intermittent failures

What we saw first

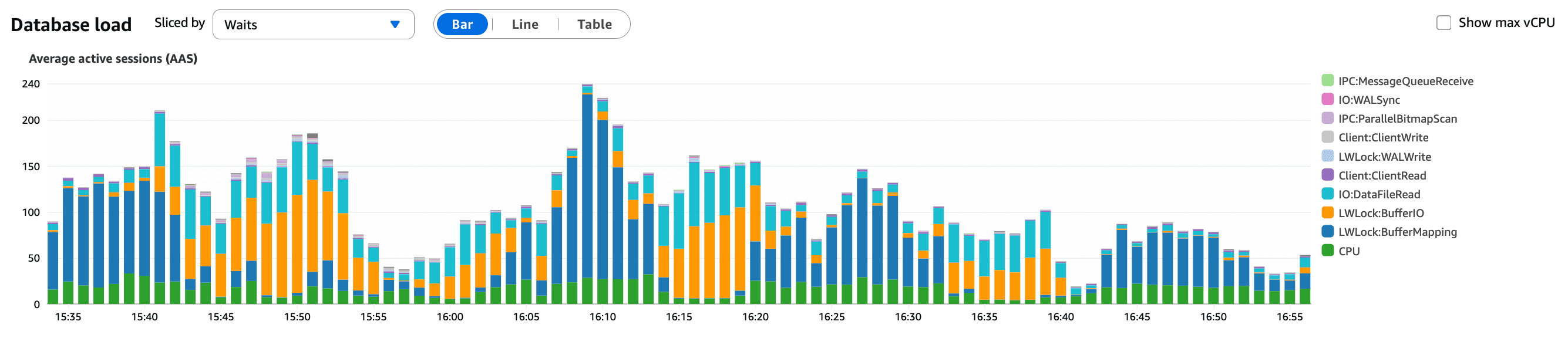

At 15:30 UTC, automated alerts triggered for elevated database load. Early investigation showed increasing Average Active Sessions (AAS) and rapidly rising statement latency, while overall traffic remained within expected bounds.

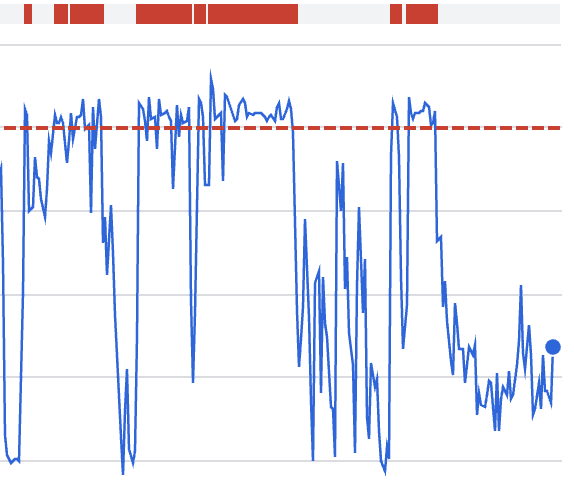

What this graph shows:

A sustained increase in active database sessions

Sessions stacking up rather than completing quickly

A strong signal that queries were becoming slower, not that traffic had increased

This suggested a change in our application rather than a traffic spike, which traffic analysis confirmed.
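Little's law makes this reasoning concrete. The average number of sessions in flight equals the query arrival rate multiplied by the average time each query spends in the database. The minimal sketch below uses illustrative numbers, not figures from our metrics, to show AAS climbing while the request rate stays flat:

```typescript
// Little's law: L = λ × W
//   L = average number of active sessions (AAS)
//   λ = query arrival rate (queries per second)
//   W = average time a query spends in the database
// Latency is taken in milliseconds, so divide by 1000 to get seconds.
function averageActiveSessions(queriesPerSecond: number, avgLatencyMs: number): number {
  return (queriesPerSecond * avgLatencyMs) / 1000;
}

// Hypothetical numbers for illustration only: traffic is held constant
// while per-query latency grows.
const arrivalRate = 2000; // queries per second, unchanged throughout
for (const latencyMs of [5, 20, 80]) {
  const aas = averageActiveSessions(arrivalRate, latencyMs);
  console.log(`latency ${latencyMs} ms -> AAS ${aas}`); // 10, then 40, then 160
}
```

With traffic held constant, quadrupling per-query latency quadruples the number of active sessions. That is the "sessions stacking up" pattern described above.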

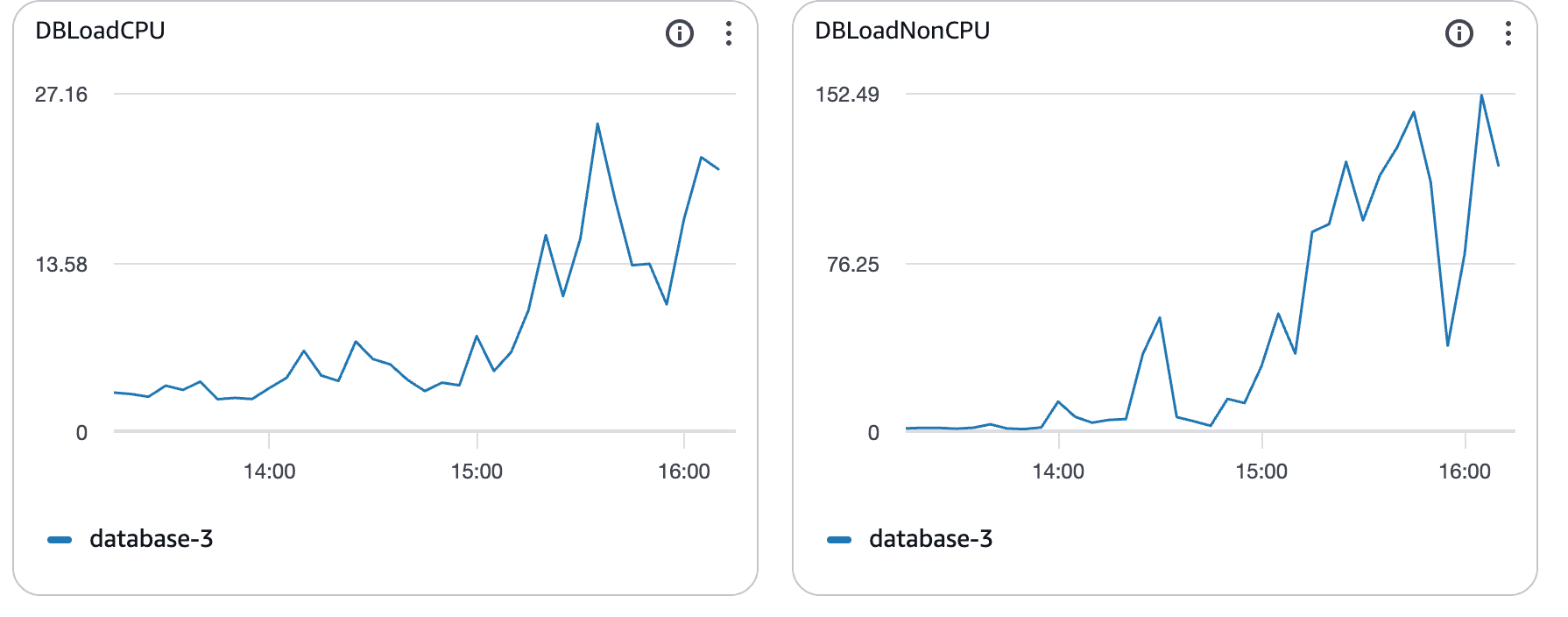

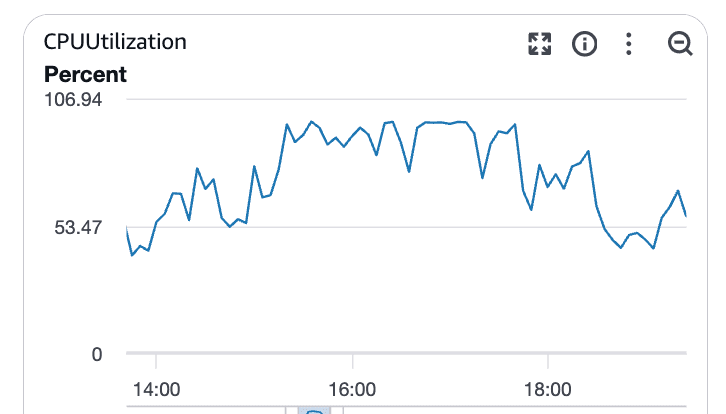

CPU saturation confirms the bottleneck

As load increased, database CPU usage followed.

What these graphs show:

CPU utilization climbing well above baseline

Sustained saturation rather than short spikes

Clear correlation with rising query latency

At this point, Postgres was CPU-bound. Queries were running longer, blocking others, and latency spread across the system.

Timeline (UTC)

15:30 – Automated alerts for high DB load. Initial diagnostics showed elevated DELETE activity on APIv2.

15:49 – We tightened DELETE rate limits to reduce immediate pressure.

16:03 – Further investigation showed traffic was normal. Database load continued rising.

16:06 – First reports from enterprise customers unable to load event types.

16:18 – Incident declared. CPU saturation caused statement latency to increase across the system.

17:07 – We began rewriting a high-load query to reduce database pressure.

17:40 – Query rewrite deployed. This helped, but did not fully resolve the issue.

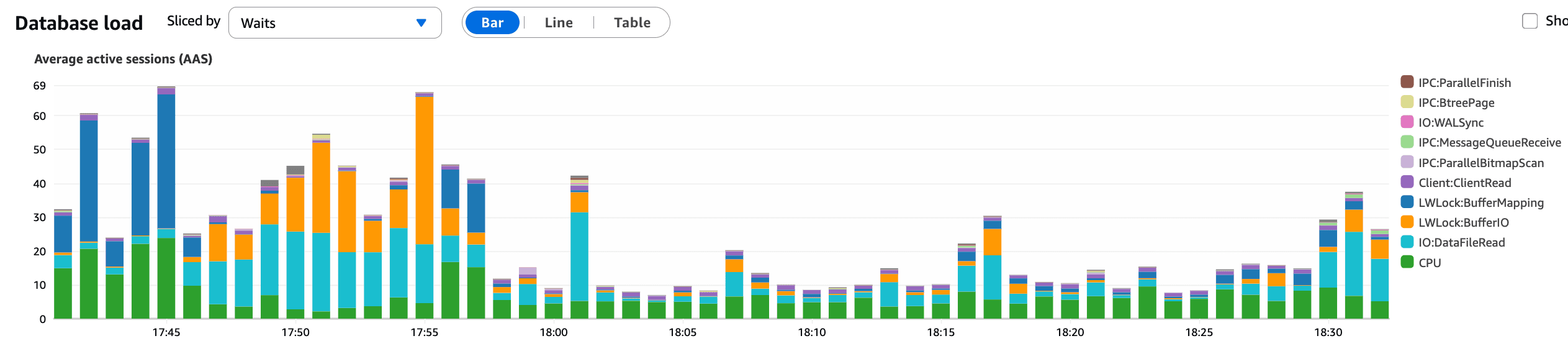

The key turning point

At 17:45, we identified an unintended interaction:

A feature released on Monday, January 26th, introduced booking search inside KBar (CMD+K). This caused a heavy API call to execute on every page load in the web app.

At 17:55, the KBar change was reverted in production.

What this graph confirms:

A clear and immediate drop in AAS after the revert

Database query pressure reduced

Latency stabilizing shortly afterward

This aligns precisely with the revert timing and confirms the KBar interaction as the primary trigger of the database incident.

18:15 – Database performance stabilized.

The primary DB incident was resolved at this point.

Secondary issues uncovered

After the database recovered, a smaller subset of users remained affected.

Users with Google Calendar integrations experienced failures due to Google API quota exhaustion. This was caused by retry behavior across multiple consumers while upstream errors were occurring.

What this graph shows:

Rapid quota consumption during the incident window

Correlation with retry behavior rather than new traffic
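Retries that fire immediately and repeatedly multiply the load on a quota-limited upstream. A common mitigation is to cap the number of attempts and space them out with exponential backoff plus random ("full") jitter, so that many consumers retrying at once do not fall into lockstep. The sketch below illustrates that general technique. `retryWithBackoff`, its options, and the injectable `sleep` and `random` hooks are hypothetical names and do not reflect how Atoms or our Google integration is implemented. The sketch also assumes the caller can classify which errors are worth retrying.

```typescript
interface RetryOptions {
  maxAttempts: number; // total attempts, including the first call
  baseDelayMs: number; // ceiling for the delay before the first retry
  maxDelayMs: number; // upper bound on any single delay
  isRetryable: (err: unknown) => boolean; // retrying quota errors only spends more quota
  sleep?: (ms: number) => Promise<void>; // injectable for testing
  random?: () => number; // injectable for testing
}

const defaultSleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: retries `fn` with capped exponential backoff and full jitter.
async function retryWithBackoff<T>(fn: () => Promise<T>, opts: RetryOptions): Promise<T> {
  const sleep = opts.sleep ?? defaultSleep;
  const random = opts.random ?? Math.random;
  let attempt = 0;
  for (;;) {
    try {
      return await fn();
    } catch (err) {
      attempt += 1;
      // Give up on non-retryable errors or once the attempt budget is spent.
      if (attempt >= opts.maxAttempts || !opts.isRetryable(err)) throw err;
      // Exponential ceiling: base * 2^(attempt - 1), capped at maxDelayMs.
      const ceiling = Math.min(opts.maxDelayMs, opts.baseDelayMs * 2 ** (attempt - 1));
      // Full jitter: wait a random duration in [0, ceiling).
      await sleep(Math.floor(random() * ceiling));
    }
  }
}

// Usage sketch: a fake upstream that fails twice, then succeeds.
let calls = 0;
const delays: number[] = [];
retryWithBackoff(
  async () => {
    calls += 1;
    if (calls < 3) throw new Error("transient upstream error");
    return "ok";
  },
  {
    maxAttempts: 5,
    baseDelayMs: 100,
    maxDelayMs: 2000,
    isRetryable: () => true,
    sleep: async (ms) => {
      delays.push(ms); // record instead of actually waiting
    },
    random: () => 0.5,
  },
).then((result) => console.log(result, calls, delays.join(","))); // ok 3 50,100
```

The attempt cap bounds the worst-case quota cost of a single failing call. The jitter spreads retries from many consumers over time instead of letting them hit the API in synchronized bursts.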

18:40 – Root cause identified: Atoms retry logic aggressively refreshing calendars.

18:46 – Atoms functionality was temporarily interrupted. Calendar events were backfilled and quota usage dropped.

18:54 – APIv2 performance remained slightly degraded; some traffic was temporarily rerouted to Vercel endpoints.

19:30 – APIv2 and Google integrations fully stabilized.

Later, we identified an additional contributing issue:

An un-awaited promisified call meant that Google API errors were never caught where the call was made. Instead, they surfaced as unhandled promise rejections at the process level and crashed Node processes.
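This failure mode can be reproduced in miniature. Since Node.js 15, an unhandled promise rejection terminates the process by default. A promise that is created but never awaited or caught therefore turns a recoverable upstream error into a crash. The sketch below applies `util.promisify` to a hypothetical callback-style `fetchCalendarEvents` function, a stand-in rather than the real Google client, to contrast the buggy pattern with the fix. The buggy version is defined for comparison only and is deliberately never called.

```typescript
import { promisify } from "node:util";

// Hypothetical callback-style call standing in for a Google API request.
// It always fails here to simulate an upstream error.
function fetchCalendarEvents(
  calendarId: string,
  callback: (err: Error | null, events: string[]) => void,
): void {
  setImmediate(() => callback(new Error(`quota exceeded for ${calendarId}`), []));
}

const fetchCalendarEventsAsync = promisify(fetchCalendarEvents);

// Buggy pattern: the promise is started but never awaited or caught.
// If it rejects, Node reports an unhandled rejection and, by default, exits.
function refreshCalendarBuggy(calendarId: string): void {
  fetchCalendarEventsAsync(calendarId); // no await, no .catch()
}

// Fixed pattern: await inside try/catch so the error is handled where the
// caller can decide what to do with it.
async function refreshCalendarFixed(calendarId: string): Promise<string[]> {
  try {
    return await fetchCalendarEventsAsync(calendarId);
  } catch (err) {
    console.error(`calendar refresh failed: ${(err as Error).message}`);
    return []; // degrade gracefully instead of crashing the process
  }
}

// Logs "calendar refresh failed: quota exceeded for primary", then 0.
refreshCalendarFixed("primary").then((events) => console.log(events.length));
```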

Root cause

The primary cause was an unexpected interaction between features:

KBar triggered a heavy API call on every page load

That API path relied on expensive database queries, particularly for large-scale users with many bookings

CPU saturation increased query latency across the system

Enterprise customers with high-volume event types were impacted most

Secondary failures (Google quota exhaustion and APIv2 instability) were not the original cause, but were revealed once the system was under stress.
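The general remedy for this kind of coupling is to defer expensive work until the user actually asks for it. The minimal sketch below assumes a hypothetical `searchBookings` function that wraps the heavy API call. The palette only queries when it is open and the user has typed a non-empty search, so constructing it on page load costs nothing. This is an illustration of the pattern, not the change made in #27314.

```typescript
type BookingResult = { id: number; title: string };

// Hypothetical stand-in for the expensive booking-search API call.
type SearchFn = (query: string) => Promise<BookingResult[]>;

// Defers the heavy call until the command palette is open and the user has
// typed a query. Constructing it (e.g. on page load) makes no request.
class LazyBookingSearch {
  private isOpen = false;
  private readonly searchBookings: SearchFn;

  constructor(searchBookings: SearchFn) {
    this.searchBookings = searchBookings;
  }

  open(): void {
    this.isOpen = true;
  }

  close(): void {
    this.isOpen = false;
  }

  search(query: string): Promise<BookingResult[]> {
    const q = query.trim();
    // Skip the API entirely while the palette is closed or the query is empty.
    if (!this.isOpen || q === "") return Promise.resolve([]);
    return this.searchBookings(q);
  }
}

// Usage sketch with a counting fake in place of the real API.
let apiCalls = 0;
const palette = new LazyBookingSearch(async (q) => {
  apiCalls += 1;
  return [{ id: 1, title: `Booking matching "${q}"` }];
});
console.log(apiCalls); // 0: simulated page load made no request
palette.open();
palette.search("standup").then((results) => console.log(apiCalls, results.length)); // 1 1
```

In a real UI, you would typically also debounce keystrokes so that typing a word does not issue one request per character.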

What we changed

Since Tuesday, we have:

Removed the KBar coupling that triggered heavy API calls (#27314)

Shipped multiple query optimizations to reduce DB load (#27364, #27319, #27309, #27315)

Changed pagination for /booking/{upcoming,…} from pre-rendered to on-demand, reducing database usage while we continue optimizing the endpoint (#27351)

Fixed the un-awaited async call causing Node process crashes in APIv2 (#27313)

Put safeguards in place at the database level to protect our database during periods of extreme usage

Rate limited calls to DELETE endpoints to 1 request per second (#27359)
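A per-client limit of one request per second can be enforced in several ways. The sketch below uses a token bucket with a capacity of one token, refilled at one token per second and keyed by a hypothetical client identifier such as a user ID or API key. It is an in-memory illustration, not the limiter shipped in #27359. A deployment with several instances would need shared state instead, and a production version would also evict idle keys.

```typescript
// Token-bucket limiter: each key holds up to `capacity` tokens, refilled
// continuously at `refillPerSecond`. A request is allowed if a whole token is available.
class TokenBucketLimiter {
  private readonly buckets = new Map<string, { tokens: number; updatedAtMs: number }>();
  private readonly capacity: number;
  private readonly refillPerSecond: number;
  private readonly now: () => number;

  constructor(capacity: number, refillPerSecond: number, now: () => number = Date.now) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.now = now; // injectable clock, useful for tests
  }

  tryAcquire(key: string): boolean {
    const nowMs = this.now();
    // New keys start with a full bucket.
    const bucket = this.buckets.get(key) ?? { tokens: this.capacity, updatedAtMs: nowMs };
    // Refill in proportion to elapsed time, never exceeding capacity.
    const elapsedSec = (nowMs - bucket.updatedAtMs) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSec * this.refillPerSecond);
    bucket.updatedAtMs = nowMs;
    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    this.buckets.set(key, bucket);
    return allowed;
  }
}

// Usage sketch with a fake clock: 1 request per second per client.
let fakeNow = 0;
const deleteLimiter = new TokenBucketLimiter(1, 1, () => fakeNow);
console.log(deleteLimiter.tryAcquire("user-42")); // true
console.log(deleteLimiter.tryAcquire("user-42")); // false: same instant
fakeNow = 500;
console.log(deleteLimiter.tryAcquire("user-42")); // false: only half a token refilled
fakeNow = 1000;
console.log(deleteLimiter.tryAcquire("user-42")); // true
```

In an HTTP handler, a `false` result would typically map to a 429 Too Many Requests response.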

Closing

This incident wasn’t caused by traffic spikes or infrastructure failure. It was caused by an unexpected feature interaction, with cascading issues due to downstream retry behavior and a subtle application bug.

We’re sorry for the disruption, especially for customers operating at scale. Tuesday’s incident directly informed improvements to how we ship features, protect our database, and handle cascading failures.

Thanks for your patience, and for holding us to a high standard.
